Contents

- 1. Principle

- 2. PyTorch source code (test version only; to be optimized later)

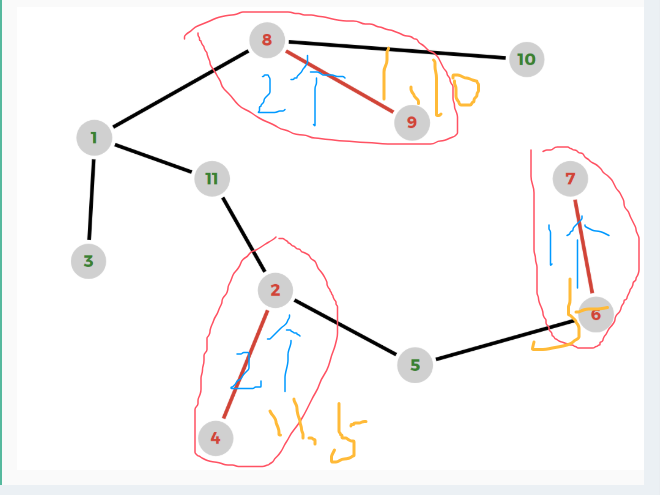

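1. Principle

Following the RNN update formula, this post hand-writes a simple single-layer RNN, both to practice the coding and to deepen understanding of how the network works.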
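For reference, the update rule that the PyTorch documentation gives for `nn.RNN` with the default tanh nonlinearity can be written as below. The symbols are chosen here to mirror the parameter names used in the code (`weight_ih_l0`, `weight_hh_l0`, `bias_ih_l0`, `bias_hh_l0`), and the row-vector convention is an assumption made to match `batch_first=True` tensors:

$$
h_t = \tanh\left(x_t W_{ih}^{\top} + b_{ih} + h_{t-1} W_{hh}^{\top} + b_{hh}\right), \qquad h_0 = 0
$$

Here $x_t$ is the input at time step $t$, $h_{t-1}$ is the previous hidden state, and $h_0$ is zero when no initial hidden state is passed to the module.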

2. PyTorch source code (test version only; to be optimized later)

import torch
import torch.nn as nn

torch.set_printoptions(precision=3, sci_mode=False)
torch.manual_seed(23435)

if __name__ == "__main__":
    input_size = 4
    hidden_size = 3
    num_layers = 1
    batch_first = True

    # Reference implementation: the official single-layer nn.RNN.
    single_rnn = nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=num_layers, batch_first=batch_first)
    print(single_rnn)

    # named_parameters() yields (name, parameter) tuples.
    for named_param in single_rnn.named_parameters():
        print(named_param)

    single_rnn_weight_ih_l0 = single_rnn.weight_ih_l0
    single_rnn_weight_hh_l0 = single_rnn.weight_hh_l0
    single_rnn_bias_ih_l0 = single_rnn.bias_ih_l0
    single_rnn_bias_hh_l0 = single_rnn.bias_hh_l0
    # print(f"single_rnn_weight_ih_l0=\n{single_rnn_weight_ih_l0}")

    # input --> (batch_size, seq_len, feature_map)
    in_batch_size = 1
    in_seq_len = 2
    in_feature_map = input_size
    input_matrix = torch.randn(in_batch_size, in_seq_len, in_feature_map)

    output_matrix, output_hn = single_rnn(input_matrix)
    print(f"output_matrix=\n{output_matrix}")
    print(f"output_hn=\n{output_hn}")

    # Manual computation. Input projection for all time steps at once: x_t @ W_ih^T + b_ih
    test_output0 = input_matrix @ single_rnn_weight_ih_l0.T + single_rnn_bias_ih_l0

    # ht_1[:, t, :] holds the previous hidden state h_{t-1} for time step t; h_0 is all zeros.
    ht_1 = torch.zeros_like(test_output0)
    print(f"ht_1=\n{ht_1}")
    print(f"ht_1.shape=\n{ht_1.shape}")

    # First pass: only time step 0 is correct, since it depends only on the zero initial state.
    test_output1 = ht_1 @ single_rnn_weight_hh_l0.T + single_rnn_bias_hh_l0
    test_output = torch.tanh(test_output1 + test_output0)

    # Feed the hidden state of time step 0 in as the previous state of time step 1.
    ht_1[:, 1, :] = test_output[:, 0, :]

    # Second pass: both time steps are now correct (this two-pass shortcut only covers seq_len = 2).
    test_output1 = ht_1 @ single_rnn_weight_hh_l0.T + single_rnn_bias_hh_l0
    test_output = torch.tanh(test_output1 + test_output0)
    print(f"test_output=\n{test_output}")
    print(f"test_output.shape=\n{test_output.shape}")

- Result: the output of the official PyTorch API matches the output of the hand-written computation.

RNN(4, 3, batch_first=True)
('weight_ih_l0', Parameter containing:
tensor([[ 0.413,  0.044,  0.243,  0.171],
        [-0.093,  0.250, -0.499, -0.450],
        [-0.571,  0.220,  0.464, -0.154]], requires_grad=True))
('weight_hh_l0', Parameter containing:
tensor([[-0.403,  0.165, -0.244],
        [ 0.216, -0.511, -0.441],
        [ 0.133,  0.278, -0.211]], requires_grad=True))
('bias_ih_l0', Parameter containing:
tensor([ 0.115, -0.493,  0.555], requires_grad=True))
('bias_hh_l0', Parameter containing:
tensor([-0.309, -0.504,  0.311], requires_grad=True))
output_matrix=
tensor([[[ 0.243, -0.467, -0.554],
         [-0.013, -0.802, -0.490]]], grad_fn=<TransposeBackward1>)
output_hn=
tensor([[[-0.013, -0.802, -0.490]]], grad_fn=<StackBackward0>)
ht_1=
tensor([[[0., 0., 0.],
         [0., 0., 0.]]])
ht_1.shape=
torch.Size([1, 2, 3])
test_output=
tensor([[[ 0.243, -0.467, -0.554],
         [-0.013, -0.802, -0.490]]], grad_fn=<TanhBackward0>)
test_output.shape=
torch.Size([1, 2, 3])
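The manual check above handles a sequence of length 2 by running the computation twice. As a minimal sketch of how the same comparison might be extended to any sequence length, the recurrence can be written as an explicit loop over time steps. The helper name `manual_rnn_forward`, the seed, and the tensor sizes below are assumptions made for illustration, and the sketch only covers a single-layer, unidirectional `nn.RNN` with tanh, bias, and `batch_first=True`.

```python
import torch
import torch.nn as nn


def manual_rnn_forward(rnn, x):
    """Hypothetical helper: recompute a single-layer tanh nn.RNN (batch_first=True) step by step."""
    batch_size, seq_len, _ = x.shape
    # h_0 is all zeros, matching nn.RNN when no initial hidden state is passed.
    h_t = x.new_zeros(batch_size, rnn.hidden_size)
    outputs = []
    for t in range(seq_len):
        # h_t = tanh(x_t W_ih^T + b_ih + h_{t-1} W_hh^T + b_hh)
        h_t = torch.tanh(
            x[:, t, :] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
            + h_t @ rnn.weight_hh_l0.T + rnn.bias_hh_l0
        )
        outputs.append(h_t)
    # output: (batch, seq_len, hidden); h_n: (num_layers=1, batch, hidden)
    return torch.stack(outputs, dim=1), h_t.unsqueeze(0)


torch.manual_seed(0)  # arbitrary seed, chosen only for this sketch
rnn = nn.RNN(input_size=4, hidden_size=3, num_layers=1, batch_first=True)
x = torch.randn(2, 5, 4)  # batch_size=2, seq_len=5, feature size=4

with torch.no_grad():
    expected_out, expected_hn = rnn(x)
    manual_out, manual_hn = manual_rnn_forward(rnn, x)

# Compare with a small tolerance rather than exact equality (float32 rounding).
print(torch.allclose(manual_out, expected_out, atol=1e-5))
print(torch.allclose(manual_hn, expected_hn, atol=1e-5))
```

Comparing with `torch.allclose` instead of reading the printed tensors avoids relying on three-decimal rounding. Building a list of per-step states and stacking them also avoids the in-place write into `ht_1` used in the two-pass version.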